PowerShell can be slow, especially when you are dealing with large amounts of data, but there are ways to speed things up depending on what you are doing. This post focuses on speeding up loops, arrays, and hash-tables. All metrics were gathered on Windows 10 1909 with PSVersion 5.1. Let's start with the summary.

Summary

- Pre-initialize your arrays if possible. Instead of adding things to your array one at a time, if you know how long your array needs to be, create it at that length and then fill it.

- If you do not know what length the array will be, create a list instead. Adding objects to lists is far faster than adding to an array.

- If you need to do random lookups on a set of data, consider sorting your Array/List and then calling BinarySearch().

- Avoid searching by piping an array to Where-Object. Either turn it into a hashtable, or sort the array and use BinarySearch().

- When adding items to a hash-table, use the Add() method rather than the += operator.

- When looping through objects, consider using a normal foreach(){}

Arrays and Lists

Now for the actual metrics. You can find the scripts used under each section. For this section, we’ll look at arrays/lists. First, creating and filling.

Most methods of creating and filling arrays perform similarly. The only noticeable slowdown comes from using PowerShell's native array without pre-initializing it. This is because arrays have a fixed size, so on the back-end every add effectively re-creates the entire array and copies the existing items into it.

| Name | Method | Time (ms) per 10000 iterations |
| --- | --- | --- |
| Native Array | $PSArray = @(); $PSArray += $i; | 1738.6903 |
| Initialized Native Array | $PSArray = @(0)*$Iterations; $PSArray[$i]=$i; | 28.6651 |
| Initialized .Net Array | $PSArray = [int[]]::new($Iterations); $PSArray[$i]=$i; | 26.2101 |
| .Net List | $PSArray = [System.Collections.Generic.List[int]]::new(); $PSArray.Add($I); | 22.6374 |
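The patterns in the table can be sketched side by side as follows. This is a minimal illustration rather than the benchmark script itself; the variable names ($Slow, $Fixed, $List, $AsArray) are made up for the example.

```powershell
# Illustrative size, matching the 10000 iterations used in the table above.
$Iterations = 10000

# Slow: each += allocates a new array and copies every existing element into it.
$Slow = @()
for ($i = 0; $i -lt $Iterations; $i++) { $Slow += $i }

# Fast when the length is known: allocate once, then assign by index.
$Fixed = [int[]]::new($Iterations)
for ($i = 0; $i -lt $Iterations; $i++) { $Fixed[$i] = $i }

# Fast when the length is unknown: a generic List grows in place.
$List = [System.Collections.Generic.List[int]]::new()
for ($i = 0; $i -lt $Iterations; $i++) { $List.Add($i) }

# Convert to an array at the end only if something downstream needs one.
$AsArray = $List.ToArray()
```

All three approaches end up holding the same values; only the cost of getting there differs.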

Now on to read performance. In this test, I am just using a simple .Contains() check. While the performance does vary depending on the type of array, every variant finishes 10000 iterations in under one second, which is not noticeable to humans. The one exception is piping your array to Where-Object for searching. That took over 11 minutes! If you really need speed though, sorting your array and using BinarySearch is the way to go.

| Name | Method | Time (ms) per 10000 iterations |
| --- | --- | --- |
| Native Array Contains | $PSArray.Contains($i) | 177.2726 |
| .Net Array Contains | $PSArray.Contains($i) | 38.632 |
| .Net List Contains | $PSArray.Contains($i) | 87.9633 |
| .Net List BinarySearch | $PSArray.BinarySearch($i) | 23.1007 |
| Native Array with Pipe Filtering | $PSArray \| Where-Object {$_ -eq $i} | 680831.936 |
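As a rough sketch of the BinarySearch approach, the list has to be sorted first, and the return value is an index rather than a boolean. The sample values and variable names below are made up for illustration.

```powershell
# Sample data in arbitrary order (illustrative values only).
$List = [System.Collections.Generic.List[int]]::new()
foreach ($n in 50, 10, 40, 20, 30) { $List.Add($n) }

# BinarySearch only gives correct results on sorted data.
$List.Sort()

# Returns the zero-based index when the item exists, or a negative number when it does not.
$Index = $List.BinarySearch(40)
$Found = $Index -ge 0

# 35 is not in the list, so the result is negative.
$Missing = $List.BinarySearch(35) -lt 0
```

For plain .Net arrays, the static [Array]::Sort() and [Array]::BinarySearch() methods play the same role. Sorting has its own cost, so this pays off mainly when you sort once and search many times.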

Let's take a look at hash-tables. Hash-tables are useful because they let you assign a key to an object and then look it up quickly later. When adding to a hash-table, though, the computer has to make sure the key being added is unique. This has a noticeably negative effect with the native PowerShell hash-table when items are added using the $HashTable += @{} pattern, which also builds a new hash-table from both operands on every add. At about 23 seconds for 10000 items, this is still doable for most scripts. That said, the more items you add, the slower it gets. A quick and easy change is to use the Add() method instead of the $HashTable += @{} pattern. If you do that, there is no real performance difference between the native PowerShell hashtable and a .Net Dictionary.

| Name | Method | Time (ms) per 10000 iterations |
| --- | --- | --- |
| Native Hashtable | $PSArray = @{}; $PSArray += @{$I.ToString()=$I}; | 22859.229 |
| .Net Dictionary | $PSArray = [System.Collections.Generic.Dictionary[string,int]]::new(); $PSArray.Add($I.ToString(), $I); | 30.5428 |
| Native Hashtable with Add Function | $PSArray = @{}; $PSArray.Add($I.ToString(),$I); | 32.5752 |
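The three patterns from the table look roughly like this in a script. This is a sketch with illustrative variable names; the slow loop is kept to 100 items so the example runs quickly.

```powershell
$Iterations = 10000

# Slow pattern: every += builds a new hashtable from both operands.
# Limited to 100 items here only to keep the example quick.
$Slow = @{}
for ($i = 0; $i -lt 100; $i++) { $Slow += @{ "$i" = $i } }

# Fast pattern: Add() inserts into the existing hashtable in place.
$Fast = @{}
for ($i = 0; $i -lt $Iterations; $i++) { $Fast.Add($i.ToString(), $i) }

# A typed .Net Dictionary uses the same Add() call.
$Dict = [System.Collections.Generic.Dictionary[string,int]]::new()
for ($i = 0; $i -lt $Iterations; $i++) { $Dict.Add($i.ToString(), $i) }

# Add() throws if the key already exists; index assignment overwrites instead.
$Fast['0'] = 0
```

If duplicate keys are possible in your data, index assignment ($Fast[$Key] = $Value) avoids the exception that Add() would throw.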

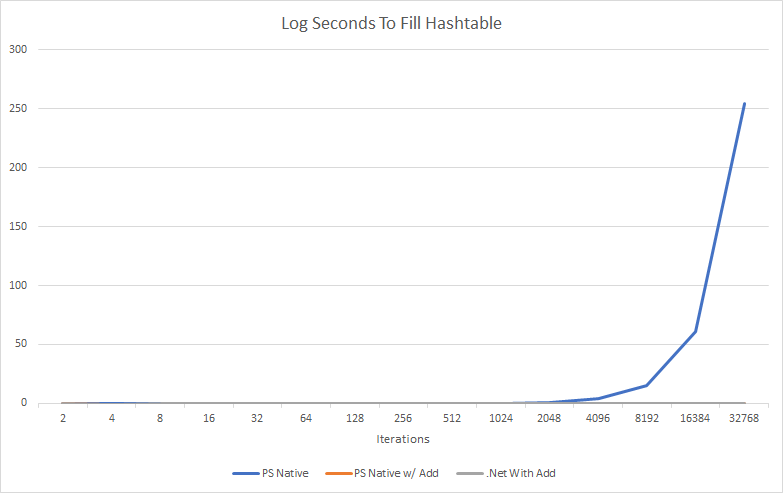

To illustrate how slow hashtables can get as you add more items, I charted it out.

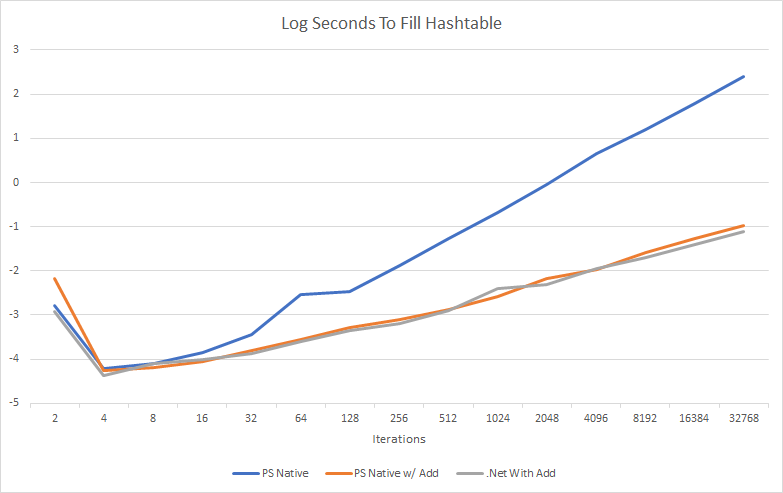

Well, that is not super useful, is it? All it shows is that the $HashTable += @{} pattern is so slow that the other methods don't even register. Let's look at that in log10 scale.

Definitely make sure you use the .Add() method for any large hash-table!

For reading hash-tables, I just checked how quickly keys could be searched. Both .Net and the native method of creating hash-tables were suitably fast.

| Name | Method | Time (ms) per 10000 iterations |
| --- | --- | --- |
| Native Hashtable Contains | $PSArray.ContainsKey($i) | 31.041 |
| .Net Hashtable Contains | $PSArray.ContainsKey($I) | 21.0664 |
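One way to put this to use is to build a hashtable index once and replace repeated Where-Object searches with key lookups. The sketch below assumes made-up sample objects with Name and Id properties; none of these names come from the benchmark scripts.

```powershell
# Hypothetical sample data: 1000 objects with a unique Name property.
$Users = foreach ($i in 1..1000) {
    [pscustomobject]@{ Name = "user$i"; Id = $i }
}

# Build the lookup once, keyed on the property you search by.
$ByName = @{}
foreach ($User in $Users) { $ByName.Add($User.Name, $User) }

# Each lookup is now a key check rather than a scan of the whole collection.
$Match = $null
if ($ByName.ContainsKey('user500')) {
    $Match = $ByName['user500']
}
```

Building the index costs one pass over the data, so it is worth doing when you expect more than a handful of lookups.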

Scripts used for metrics gathering and the Excel sheet used to create charts.

Loops

Finally, let's look at loop performance. If you need to perform some action on every item in a collection, you have several options. It takes a large array to notice much of a difference between them, but in my tests a foreach(){} loop outperformed all other methods, and piping a collection to ForEach-Object {} had the worst performance.

| Name | Method | Time (ms) for 100000 iterations |
| --- | --- | --- |
| For Loop | for ($i =0;$i-lt $iterations;$i++){…} | 142.6532 |
| Foreach Loop | foreach ($item in $myarray) {…} | 62.4789 |
| Piping to foreach-object | $myarray \| foreach-object {…} | 389.7384 |
| .ForEach function | $myarray.ForEach{…} | 160.2419 |
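To try a comparison like this on your own machine, something along these lines should work. It is a sketch using Measure-Command with a trivial loop body chosen for illustration, not the script used for the table, so expect different absolute numbers.

```powershell
$Iterations = 100000
$MyArray = 1..$Iterations

# Each block performs the same trivial work so only the loop construct differs.
$ForTime = Measure-Command {
    for ($i = 0; $i -lt $MyArray.Count; $i++) { $null = $MyArray[$i] * 2 }
}
$ForEachTime = Measure-Command {
    foreach ($Item in $MyArray) { $null = $Item * 2 }
}
$PipelineTime = Measure-Command {
    $MyArray | ForEach-Object { $null = $_ * 2 }
}
$MethodTime = Measure-Command {
    $MyArray.ForEach({ $null = $_ * 2 })
}

# Collect the timings into one object for easy comparison.
$Results = [pscustomobject]@{
    ForLoopMs       = $ForTime.TotalMilliseconds
    ForeachLoopMs   = $ForEachTime.TotalMilliseconds
    ForEachObjectMs = $PipelineTime.TotalMilliseconds
    ForEachMethodMs = $MethodTime.TotalMilliseconds
}
$Results
```

Single runs can be noisy, so repeating each measurement a few times and averaging gives a fairer picture.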

Scripts used to pull these metrics